Introduction

Autoencoders are a class of artificial neural networks that play a vital role in unsupervised deep learning. They are designed to learn efficient representations of data, typically for dimensionality reduction or feature learning. By compressing input data into a lower-dimensional space and then reconstructing it, autoencoders can discover underlying structures in the data without the need for labelled examples. This article explores the function, architecture, and applications of autoencoders, and highlights their significance in Deep Learning Courses and training programs in cities like Delhi.

What Are Autoencoders?

Autoencoders are pivotal in the realm of unsupervised deep learning, offering a powerful method for dimensionality reduction and feature extraction. By learning to encode data into a compact latent representation and then reconstruct it, autoencoders help reveal underlying structures within the data.

This unsupervised approach is particularly useful in applications like anomaly detection, image compression, and data denoising. Mastering autoencoders is crucial for those aiming to excel in fields like machine learning and data science, as they provide deeper insights into complex datasets.

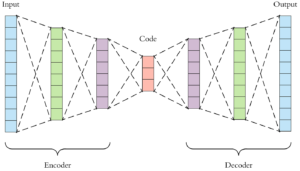

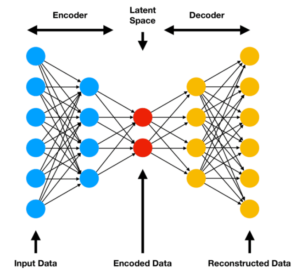

The encoder and the decoder are an autoencoder’s two main components. The encoder maps the input data to a latent space representation (usually of lower dimension), while the decoder reconstructs the original data from this representation.
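As a rough illustration of the two components, the sketch below implements one encoder layer and one decoder layer in plain Python. The layer sizes (4 inputs, 2 latent units), the `tanh` activation on the encoder, and the random weights are arbitrary assumptions. The weights are untrained, so `x_hat` will not yet resemble `x`; training is what makes the reconstruction accurate.

```python
import math
import random

def matvec(weights, vector):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in weights]

def encode(x, enc_weights):
    # Encoder: map the input to a lower-dimensional latent code (tanh activation).
    return [math.tanh(h) for h in matvec(enc_weights, x)]

def decode(z, dec_weights):
    # Decoder: map the latent code back to the input dimension (linear output).
    return matvec(dec_weights, z)

random.seed(0)
input_dim, latent_dim = 4, 2  # hypothetical sizes
enc_weights = [[random.uniform(-1, 1) for _ in range(input_dim)] for _ in range(latent_dim)]
dec_weights = [[random.uniform(-1, 1) for _ in range(latent_dim)] for _ in range(input_dim)]

x = [0.5, -1.0, 0.25, 2.0]
z = encode(x, enc_weights)      # 2 latent values
x_hat = decode(z, dec_weights)  # 4 reconstructed values
print(len(z), len(x_hat))  # 2 4
```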

Architecture of Autoencoders

| Component | Description |
| --- | --- |
| Input Layer | Takes the input data. |
| Encoder | Compresses the input into a latent space representation. |
| Latent Space | The compressed representation of the input. |
| Decoder | Reconstructs the data from the latent space. |
| Output Layer | Produces the reconstructed data. |

Input Layer → Encoder → Latent Space → Decoder → Output Layer
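To make the bottleneck concrete, here is a parameter count for one hypothetical fully connected configuration sized for 28×28 inputs such as MNIST images (784 → 128 → 32 → 128 → 784). The layer sizes are assumptions chosen for illustration; each dense layer contributes `n_in * n_out` weights plus `n_out` biases.

```python
# Hypothetical layer sizes: input -> encoder hidden -> latent -> decoder hidden -> output.
layer_sizes = [784, 128, 32, 128, 784]

def dense_param_count(sizes):
    """Total weights plus biases for a stack of fully connected layers."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes, sizes[1:]))

latent_dim = min(layer_sizes)               # the bottleneck width
compression_ratio = layer_sizes[0] / latent_dim

print(dense_param_count(layer_sizes))  # 209968
print(compression_ratio)               # 24.5
```

The encoder and decoder here are mirror images, which is a common but not mandatory design choice.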

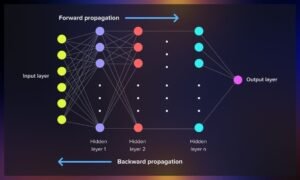

Training Process

Autoencoders are trained with backpropagation: the network compares each reconstructed output with the original input, backpropagation computes how that difference changes with respect to every weight, and an optimizer such as gradient descent updates the weights to make the difference smaller. This difference is quantified using a loss function, typically Mean Squared Error (MSE).
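A minimal sketch of that training loop follows, assuming a purely linear autoencoder (no activations, no biases), full-batch gradient descent, and a small hypothetical dataset whose 4-dimensional points lie on a 2-dimensional subspace. The function `train_linear_autoencoder`, its learning rate, and its epoch count are illustrative choices, not a library API; the gradients are derived by hand for the mean of all squared reconstruction errors.

```python
import random

def matvec(m, v):
    """Multiply a matrix (a list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def transpose(m):
    return [list(col) for col in zip(*m)]

def train_linear_autoencoder(data, latent_dim, lr=0.1, epochs=300, seed=0):
    """Full-batch gradient descent on the mean squared reconstruction error."""
    rng = random.Random(seed)
    d = len(data[0])
    w_enc = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(latent_dim)]
    w_dec = [[rng.uniform(-0.5, 0.5) for _ in range(latent_dim)] for _ in range(d)]
    scale = 2.0 / (len(data) * d)  # derivative factor of the mean over all N*d squared errors
    history = []
    for _ in range(epochs):
        grad_enc = [[0.0] * d for _ in range(latent_dim)]
        grad_dec = [[0.0] * latent_dim for _ in range(d)]
        total = 0.0
        for x in data:
            z = matvec(w_enc, x)                   # forward pass: encoder
            x_hat = matvec(w_dec, z)               # forward pass: decoder
            err = [xh - xi for xh, xi in zip(x_hat, x)]
            total += sum(e * e for e in err)
            g_out = [scale * e for e in err]       # dLoss/dx_hat
            g_z = matvec(transpose(w_dec), g_out)  # backpropagate through the decoder
            for i in range(d):
                for j in range(latent_dim):
                    grad_dec[i][j] += g_out[i] * z[j]
                    grad_enc[j][i] += g_z[j] * x[i]
        history.append(total / (len(data) * d))  # loss before this epoch's update
        # Gradient-descent step on both weight matrices.
        for i in range(d):
            for j in range(latent_dim):
                w_dec[i][j] -= lr * grad_dec[i][j]
                w_enc[j][i] -= lr * grad_enc[j][i]
    return w_enc, w_dec, history

# Hypothetical data: every point has the form [a, b, a + b, a - b].
pairs_ab = [(0.5, 0.1), (-0.3, 0.4), (0.2, -0.5), (-0.4, -0.2), (0.1, 0.3), (0.45, -0.15)]
data = [[a, b, a + b, a - b] for a, b in pairs_ab]

w_enc, w_dec, history = train_linear_autoencoder(data, latent_dim=2)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

In practice, autoencoders add nonlinear activations and rely on a framework's automatic differentiation instead of hand-written gradients; writing them out here just makes each backpropagation step visible.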

Loss Function

The loss function can be represented mathematically as:

$$
\text{Loss} = \frac{1}{N} \sum_{i=1}^{N} \left(x_i - \hat{x}_i\right)^2
$$

Where:

$N$ = number of samples

$x_i$ = original input

$\hat{x}_i$ = reconstructed output
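A direct translation of the formula is shown below, treating each x_i as a single value as the notation does. The function name `mse` is an illustrative choice; for vector-valued inputs such as images, the same average is usually taken over every element.

```python
def mse(x, x_hat):
    """Mean squared error: (1/N) * sum over i of (x_i - x_hat_i)^2."""
    if not x or len(x) != len(x_hat):
        raise ValueError("x and x_hat must be non-empty and the same length")
    return sum((xi - xh) ** 2 for xi, xh in zip(x, x_hat)) / len(x)

# Four hypothetical original values and their reconstructions.
print(mse([1.0, 2.0, 3.0, 4.0], [1.0, 2.5, 2.0, 4.0]))  # 0.3125
```

Here the squared errors are 0, 0.25, 1, and 0, so the loss is 1.25 / 4 = 0.3125.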

Applications of Autoencoders

1. Dimensionality Reduction

Autoencoders can reduce the dimensionality of data while preserving its essential features. This is particularly useful in scenarios where high-dimensional data can hinder analysis, such as image and text data.

2. Anomaly Detection

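Autoencoders can identify anomalies by measuring reconstruction error. An autoencoder trained on normal data learns to reconstruct normal inputs well, so an unusual input tends to produce a larger error. If the reconstruction error exceeds a certain threshold, the input data point is considered an anomaly.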
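A minimal sketch of threshold-based detection follows. To keep it self-contained, a fixed projection onto the line `x0 == x1` stands in for a trained autoencoder (the hypothetical `reconstruct` function), and the threshold rule (three times the largest error seen on normal data) is an arbitrary assumption; in a real system the threshold would be chosen from the error distribution on held-out normal data.

```python
def reconstruct(x):
    # Stand-in for a trained autoencoder (hypothetical): projects onto the line x0 == x1,
    # which is where the "normal" data below lies.
    m = (x[0] + x[1]) / 2
    return [m, m]

def reconstruction_error(x):
    x_hat = reconstruct(x)
    return sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)

normal = [[0.1, 0.12], [0.5, 0.48], [-0.3, -0.29], [0.9, 0.91]]
threshold = 3 * max(reconstruction_error(x) for x in normal)  # heuristic, an assumption

def is_anomaly(x):
    return reconstruction_error(x) > threshold

print([is_anomaly(x) for x in [[0.4, 0.41], [0.4, -0.6]]])  # [False, True]
```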

3. Image Denoising

Denoising autoencoders are trained to remove noise from images. By learning to reconstruct clean images from noisy inputs, these models enhance image quality in applications like computer vision.
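The key difference from a plain autoencoder lies in the training pairs: the input is a corrupted copy, but the loss compares the output with the clean original. The sketch below assumes additive Gaussian noise with a hypothetical `noise_std` of 0.1 and pixel intensities scaled to [0, 1]; other corruption types are also possible. `model` can be any callable, and the `identity` placeholder only shows the loss that a model doing no denoising would get.

```python
import random

def make_denoising_pairs(clean_samples, noise_std=0.1, seed=0):
    """Return (noisy_input, clean_target) pairs using additive Gaussian noise."""
    rng = random.Random(seed)
    pairs = []
    for sample in clean_samples:
        # Corrupt each pixel, then clip back to the valid [0, 1] intensity range.
        noisy = [min(1.0, max(0.0, p + rng.gauss(0.0, noise_std))) for p in sample]
        pairs.append((noisy, sample))
    return pairs

def denoising_loss(model, pairs):
    """Mean squared error between model(noisy) and the clean target."""
    total, count = 0.0, 0
    for noisy, clean in pairs:
        output = model(noisy)
        total += sum((o - c) ** 2 for o, c in zip(output, clean))
        count += len(clean)
    return total / count

def identity(x):
    # Placeholder "model" that returns its input unchanged (no denoising).
    return list(x)

# Hypothetical 2x2 "images" flattened to 4 pixel intensities.
clean_images = [[0.2, 0.5, 0.8, 0.5], [0.9, 0.1, 0.4, 0.6]]
pairs = make_denoising_pairs(clean_images)
print(denoising_loss(identity, pairs))
```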

4. Generative Models

Variational autoencoders (VAEs) extend traditional autoencoders to generate new data points similar to the training set. This has applications in image generation, data augmentation, and more.
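In the common VAE formulation, the encoder outputs a mean and a log-variance for each latent dimension instead of a single code. The sketch below shows two pieces that set it apart from a plain autoencoder: sampling the latent vector with the reparameterization trick, and the KL-divergence term (against a standard normal prior) that is added to the reconstruction loss. The function names and the `mu`/`log_var` values are hypothetical, and the encoder and decoder networks are omitted. To generate new data, one would sample z from a standard normal distribution and pass it through the trained decoder.

```python
import math
import random

def sample_latent(mu, log_var, rng=random):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, sigma^2) || N(0, 1)), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [0.0, -2.0]  # hypothetical encoder outputs
z = sample_latent(mu, log_var, rng)
print(len(z), kl_to_standard_normal(mu, log_var))
```

Sampling through `mu + sigma * eps` keeps the randomness in `eps`, so gradients can flow back into `mu` and `log_var` during training.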

Training Data

| Dataset | Number of Samples | Dimensions | Use Case |
| --- | --- | --- | --- |
| MNIST | 70,000 | 28×28 pixels | Handwritten digit recognition |
| CIFAR-10 | 60,000 | 32×32 pixels | Image classification |
| UCI Adult Dataset | 48,842 | 14 attributes | Anomaly detection |

Autoencoders in Deep Learning Training

Understanding autoencoders is crucial for anyone pursuing a career in data science or artificial intelligence. Deep Learning Courses often include modules focused on unsupervised learning techniques, with autoencoders being a key topic.

These programs provide hands-on experience with real-world datasets, allowing students to apply their knowledge and build practical skills. Deep Learning Training in Delhi gives aspiring data scientists the skills they need to apply autoencoders effectively, making those learners valuable assets in the tech industry.

Deep Learning Training in Delhi offers comprehensive instruction in neural networks, computer vision, and AI techniques, providing hands-on experience to master deep learning models and their real-world applications.

Conclusion

Autoencoders are a powerful tool in unsupervised deep learning, enabling the efficient representation and reconstruction of data. Their applications range from dimensionality reduction and anomaly detection to image denoising and generative modelling. As the demand for skilled professionals in data science continues to grow, understanding autoencoders becomes increasingly important. By mastering these techniques, individuals can enhance their careers and contribute to the evolving landscape of artificial intelligence and machine learning.